Introduction

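In the previous article we created three virtual machines. This article walks through building a Kubernetes (k8s) cluster on them, step by step.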

Prerequisites

1. Three virtual machines: 192.168.1.211, 192.168.1.212, and 192.168.1.213

2. Access to the public internet

3. Enough disk space on every virtual machine; the author gave each one 100 GB

Environment preparation (run on all nodes)

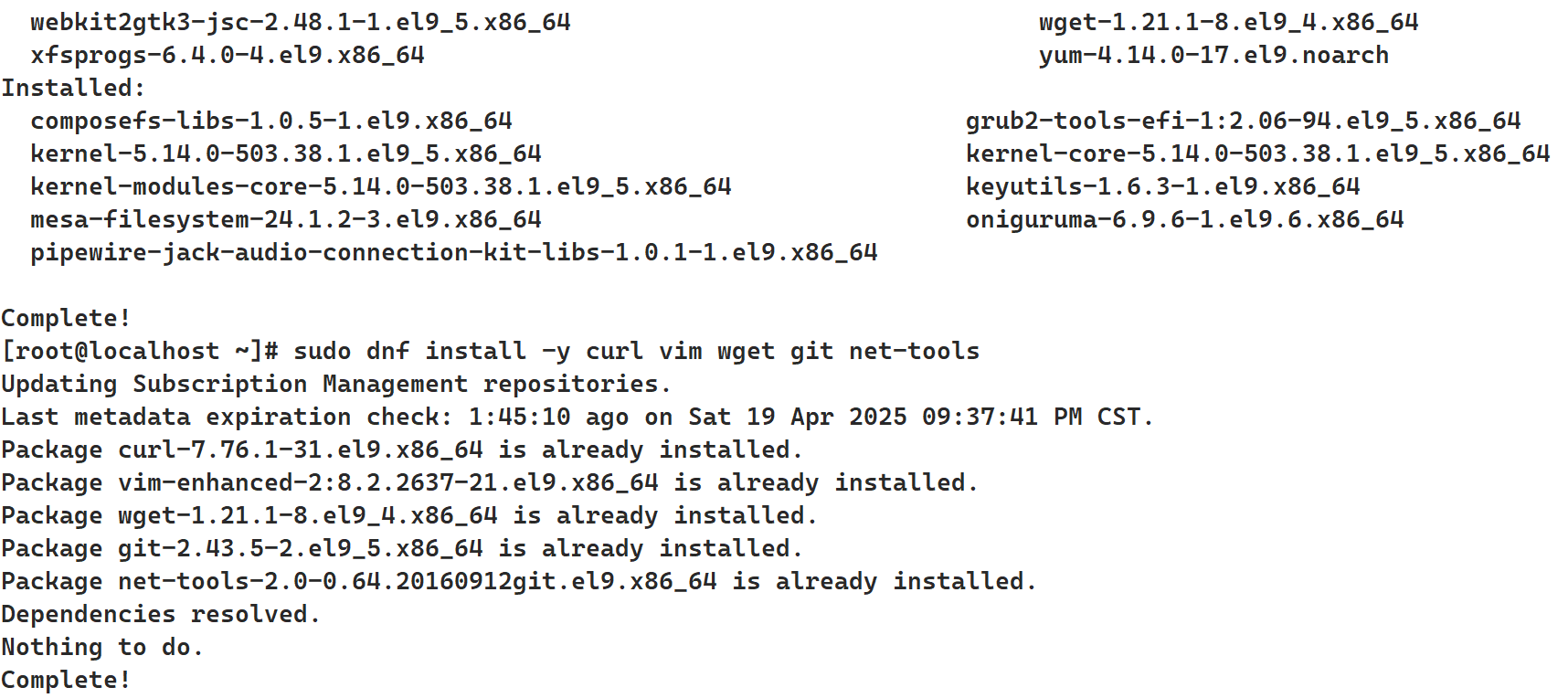

Update the system and install dependencies

sudo dnf update -y

sudo dnf install -y curl vim wget git net-tools

The result is shown below:

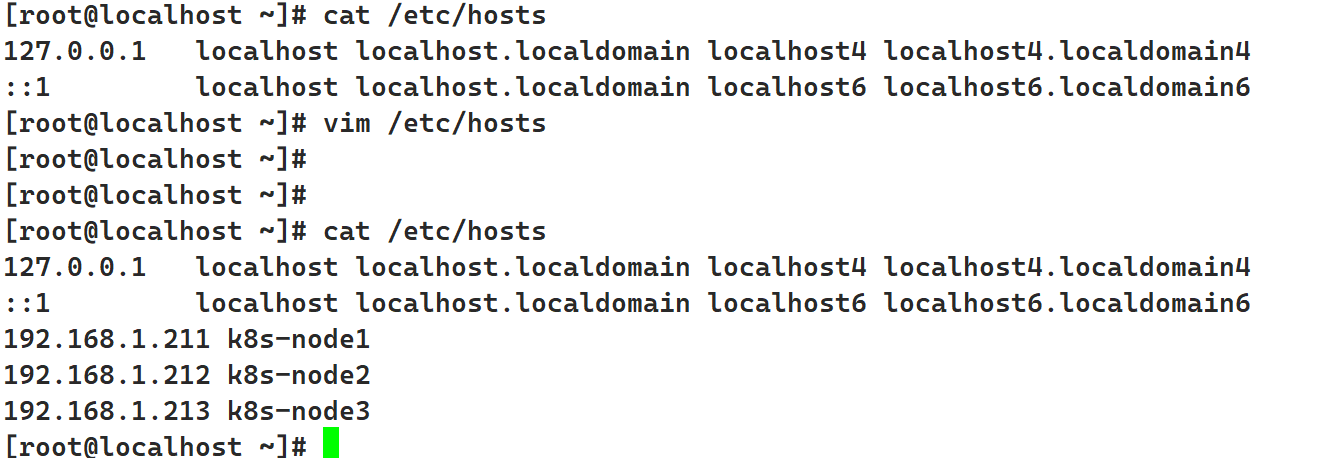

Configure the hosts file

Open the file with vim /etc/hosts and add the following entries:

192.168.1.211 k8s-node1

192.168.1.212 k8s-node2

192.168.1.213 k8s-node3

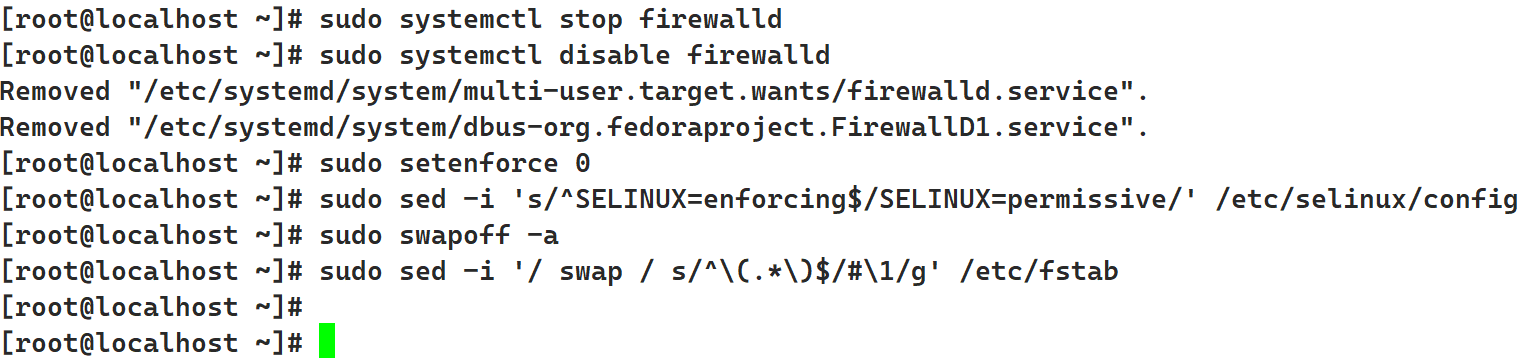
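Editing the file by hand on three nodes is easy to get wrong. As a minimal sketch, the same entries could be appended with a small idempotent helper. `add_host_entry` is a hypothetical function name, not part of any tool, and it assumes the shell is allowed to write the target file.

```shell
# add_host_entry FILE IP NAME
# Hypothetical helper: append "IP NAME" to FILE unless NAME is already mapped there.
add_host_entry() {
  if grep -qE "[[:space:]]$3([[:space:]]|\$)" "$1" 2>/dev/null; then
    return 0  # NAME is already present, leave the file untouched
  fi
  printf '%s %s\n' "$2" "$3" >> "$1"
}
```

Run as root, a call such as `add_host_entry /etc/hosts 192.168.1.211 k8s-node1` can be repeated safely for each node name, because a second run adds nothing.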

Turn off swap and the firewall, and set SELinux to permissive

sudo systemctl stop firewalld

sudo systemctl disable firewalld

sudo setenforce 0

sudo sed -i 's/^SELINUX=enforcing$/SELINUX=permissive/' /etc/selinux/config

sudo swapoff -a

sudo sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

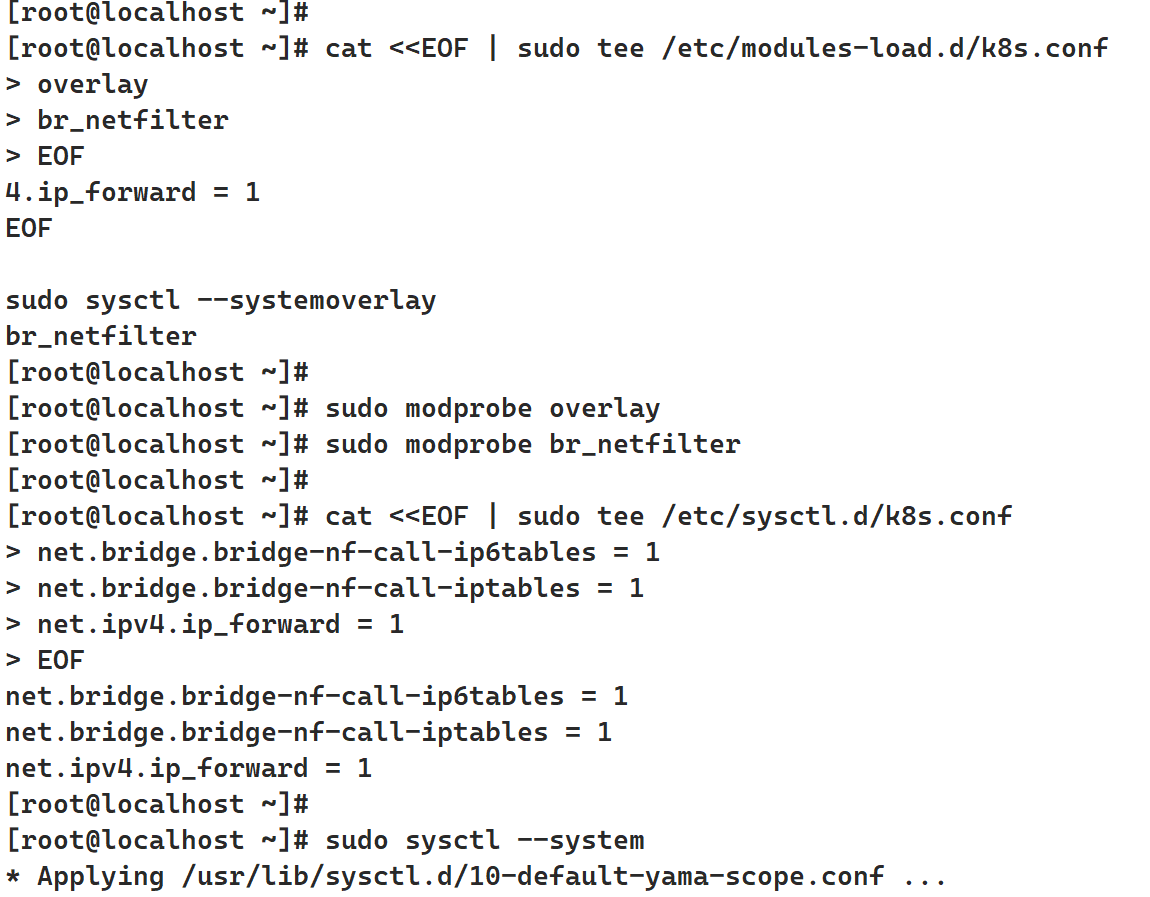
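Before moving on, it can help to confirm that these changes actually took effect. The sketch below assumes the usual Linux layout of `/proc/swaps` (one header line, then one line per active swap area) and of `/etc/selinux/config`. Both function names are hypothetical, and the optional file argument exists only so the checks can be tried against sample files.

```shell
# swap_is_off [FILE]
# Succeeds when FILE (default: /proc/swaps) lists no active swap areas.
# The first line is a header; every further line is an active swap area.
swap_is_off() {
  awk 'NR > 1 { n++ } END { exit (n > 0) }' "${1:-/proc/swaps}"
}

# selinux_config_mode [FILE]
# Prints the persistent SELinux mode from FILE (default: /etc/selinux/config).
selinux_config_mode() {
  sed -n 's/^SELINUX=//p' "${1:-/etc/selinux/config}"
}
```

For example, `swap_is_off && echo "swap is off"` should print its message after `swapoff -a`, and `selinux_config_mode` should print `permissive` after the sed command above.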

Configure kernel modules and parameters

cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

overlay

br_netfilter

EOF

sudo modprobe overlay

sudo modprobe br_netfilter

cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

EOF

sudo sysctl --system

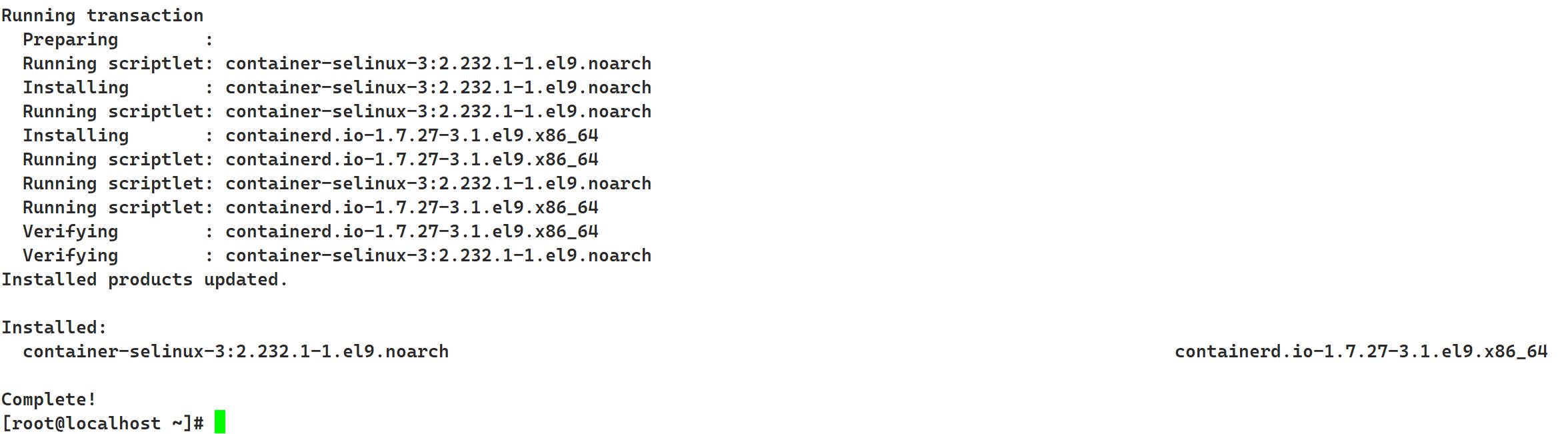
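The three parameters can be read back from `/proc/sys` to confirm that `sysctl --system` applied them. This is only a sketch: `sysctl_is_enabled` is a hypothetical helper, and its second argument (an alternative root directory) exists purely to make the check testable.

```shell
# sysctl_is_enabled KEY [PROC_ROOT]
# Succeeds when the kernel parameter KEY currently reads 1.
# The dotted key is mapped to a path, e.g. net.ipv4.ip_forward -> net/ipv4/ip_forward.
sysctl_is_enabled() {
  path="${2:-/proc/sys}/$(printf '%s' "$1" | tr . /)"
  [ -r "$path" ] && [ "$(cat "$path")" = "1" ]
}
```

A call such as `sysctl_is_enabled net.ipv4.ip_forward || echo "ip_forward is not enabled"` can be repeated for each of the three keys. The two bridge keys only exist once the br_netfilter module is loaded, so a failure there usually points at the modprobe step.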

Install containerd (all nodes)

Install containerd

sudo dnf config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

sudo dnf install -y containerd.io

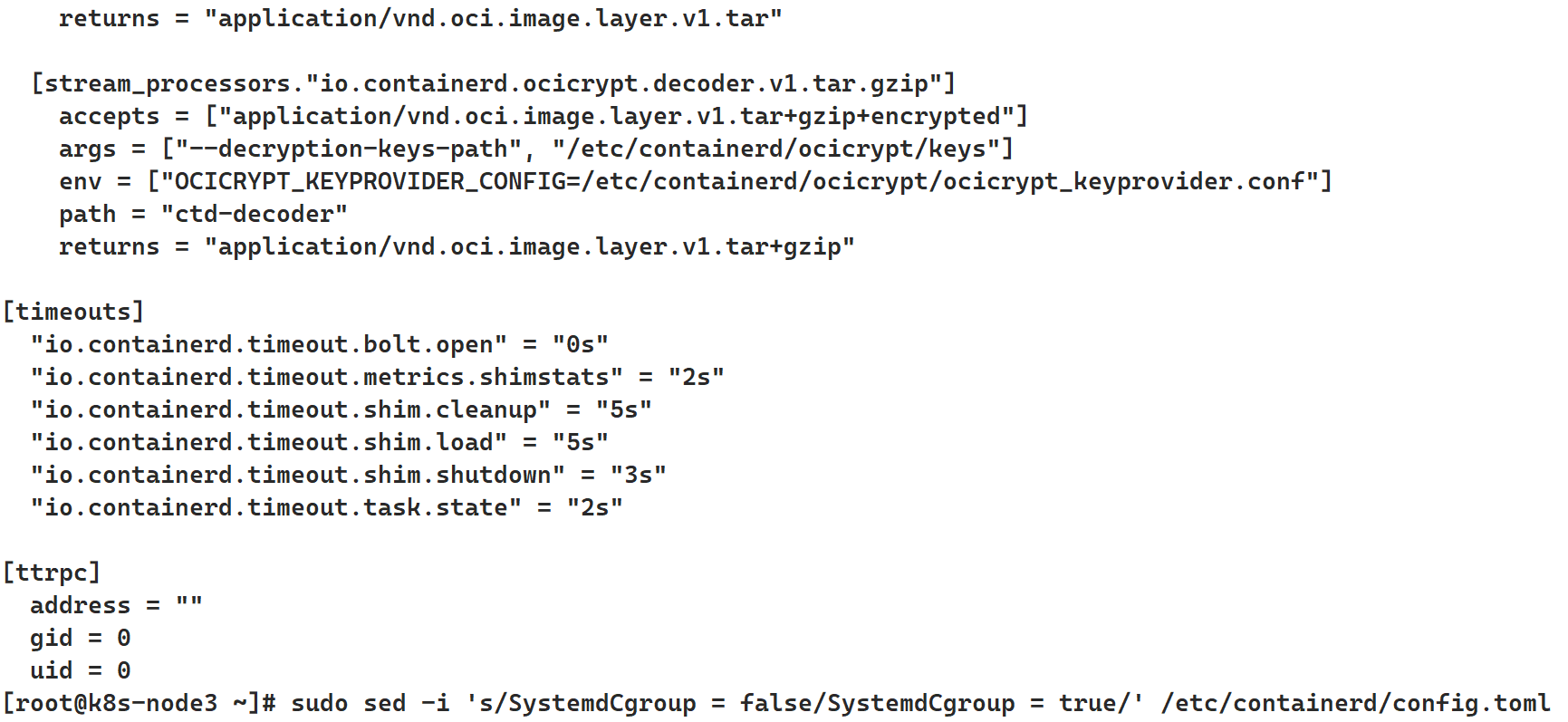

Configure containerd

sudo mkdir -p /etc/containerd

containerd config default | sudo tee /etc/containerd/config.toml

# In /etc/containerd/config.toml, find the SystemdCgroup option and set it to true:

sudo sed -i 's/SystemdCgroup = false/SystemdCgroup = true/' /etc/containerd/config.toml

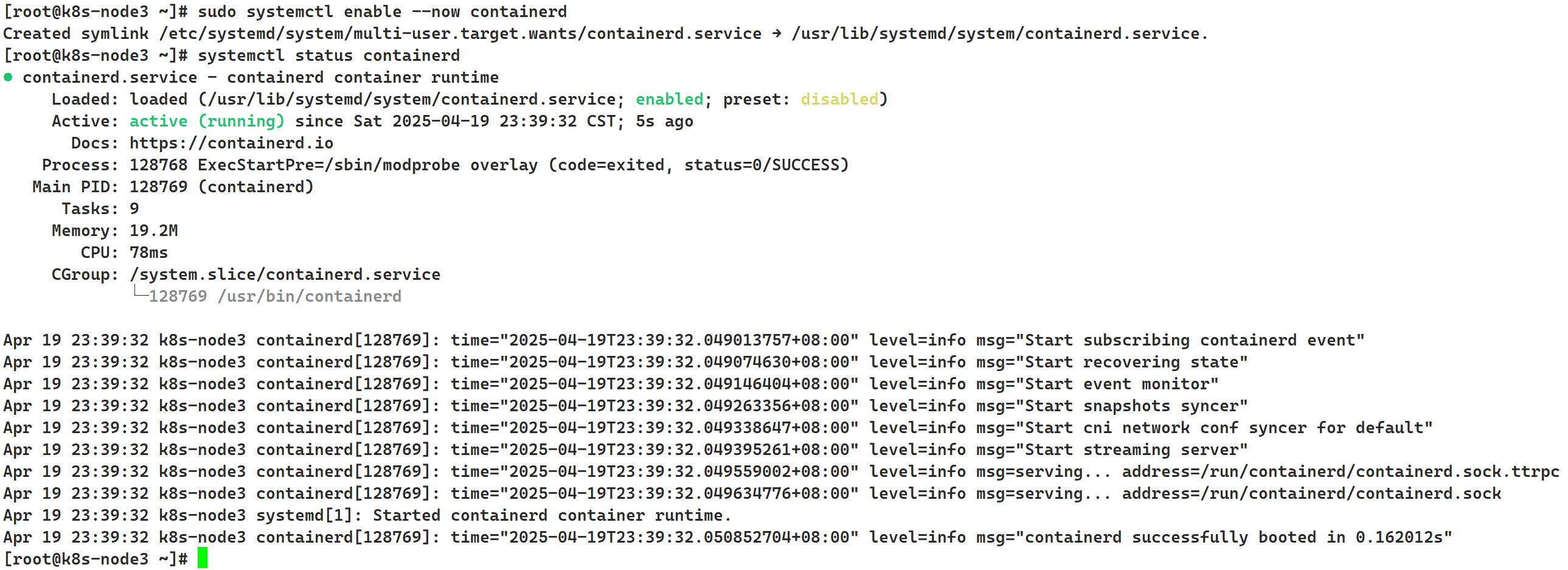
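The sed command changes nothing, and reports no error, if the generated file does not contain the exact text `SystemdCgroup = false`. A quick check like the one below can catch that. `systemd_cgroup_enabled` is a hypothetical helper name, and the default path is the one used in this guide.

```shell
# systemd_cgroup_enabled [FILE]
# Succeeds when FILE (default: /etc/containerd/config.toml) sets SystemdCgroup to true.
systemd_cgroup_enabled() {
  grep -Eq '^[[:space:]]*SystemdCgroup[[:space:]]*=[[:space:]]*true' "${1:-/etc/containerd/config.toml}"
}
```

The setting matters because kubeadm configures kubelet to use the systemd cgroup driver by default, and the container runtime has to use the same driver.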

Start containerd

sudo systemctl enable --now containerd

# Once it has started, check its status

systemctl status containerd

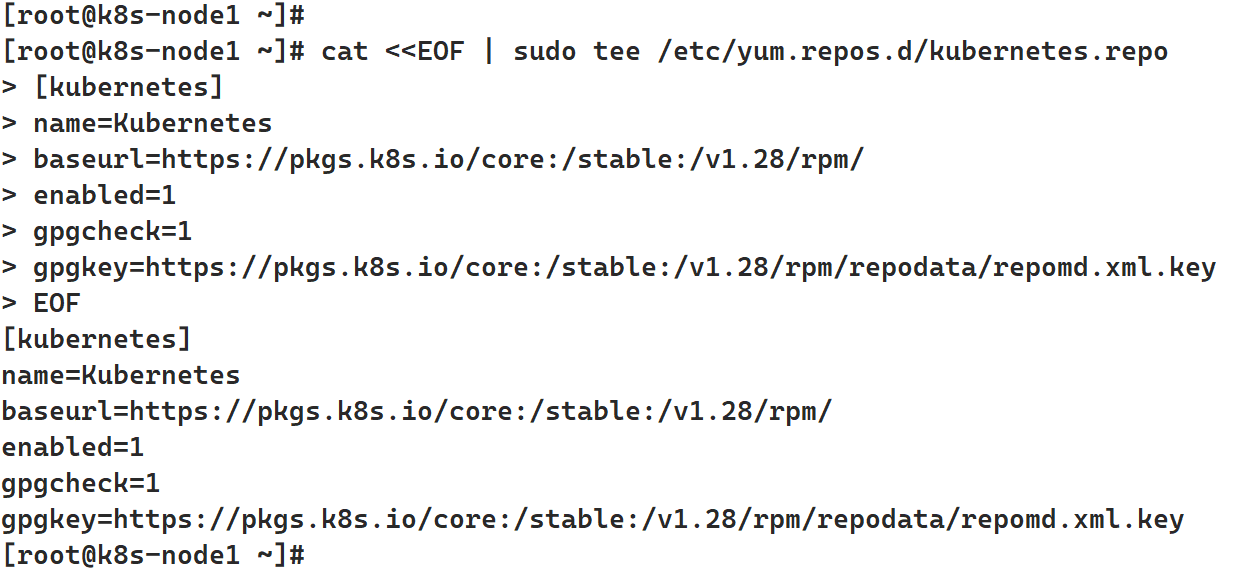

Install the Kubernetes components (all nodes)

Add the Kubernetes repository

cat <<EOF | sudo tee /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://pkgs.k8s.io/core:/stable:/v1.28/rpm/

enabled=1

gpgcheck=1

gpgkey=https://pkgs.k8s.io/core:/stable:/v1.28/rpm/repodata/repomd.xml.key

EOF

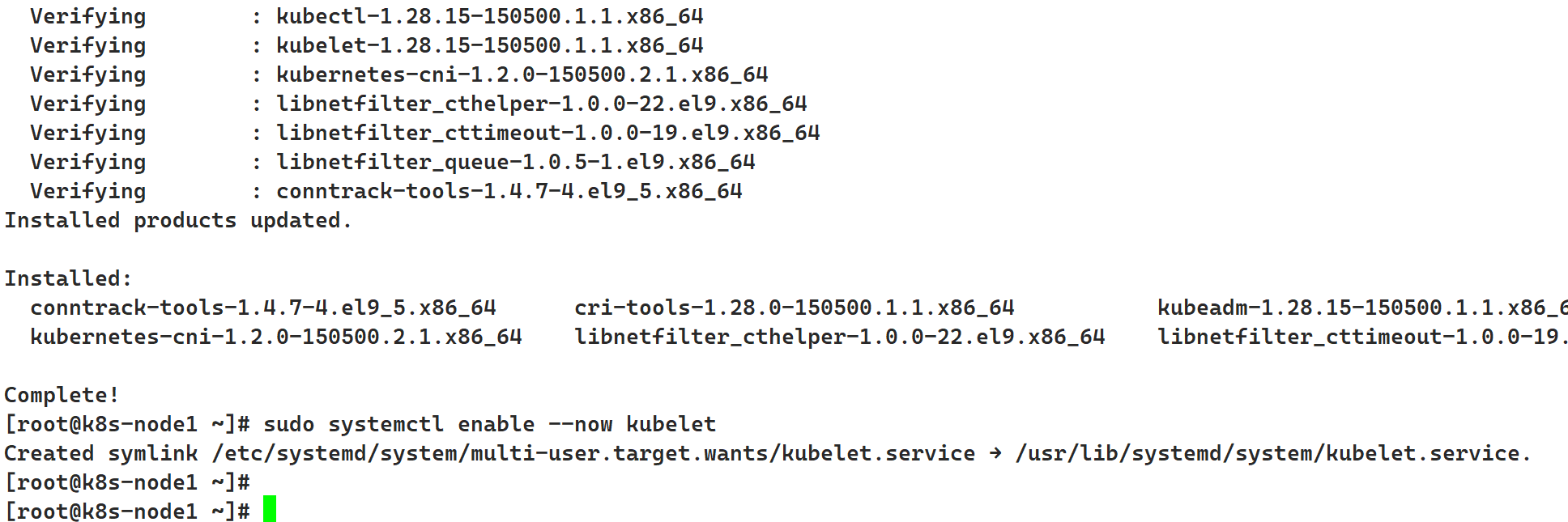
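The minor version appears twice in the repository file and should match the version passed to kubeadm init later (v1.28.15 in this guide). As a sketch, the file could be generated from a single argument so the two URLs cannot drift apart. `render_k8s_repo` is a hypothetical helper name.

```shell
# render_k8s_repo MINOR
# Hypothetical helper: print the repo definition for a Kubernetes minor version such as v1.28.
render_k8s_repo() {
  cat <<EOF
[kubernetes]
name=Kubernetes
baseurl=https://pkgs.k8s.io/core:/stable:/$1/rpm/
enabled=1
gpgcheck=1
gpgkey=https://pkgs.k8s.io/core:/stable:/$1/rpm/repodata/repomd.xml.key
EOF
}
```

It would be used as `render_k8s_repo v1.28 | sudo tee /etc/yum.repos.d/kubernetes.repo`, which produces the same file as the heredoc above.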

Install kubelet, kubeadm, and kubectl

sudo dnf install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

sudo systemctl enable --now kubelet

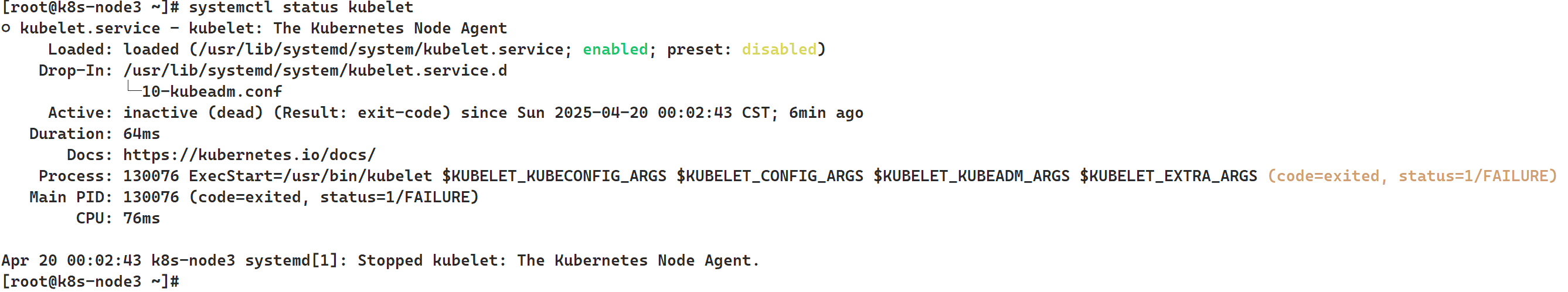

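As the screenshot shows, kubelet reports FAILURE on every node. This is expected: until kubeadm init (on the master) or kubeadm join (on a worker) has run, kubelet has no /var/lib/kubelet/config.yaml configuration file, so it fails with this error. Ignore it and continue.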

Initialize the Kubernetes cluster

Run on all nodes

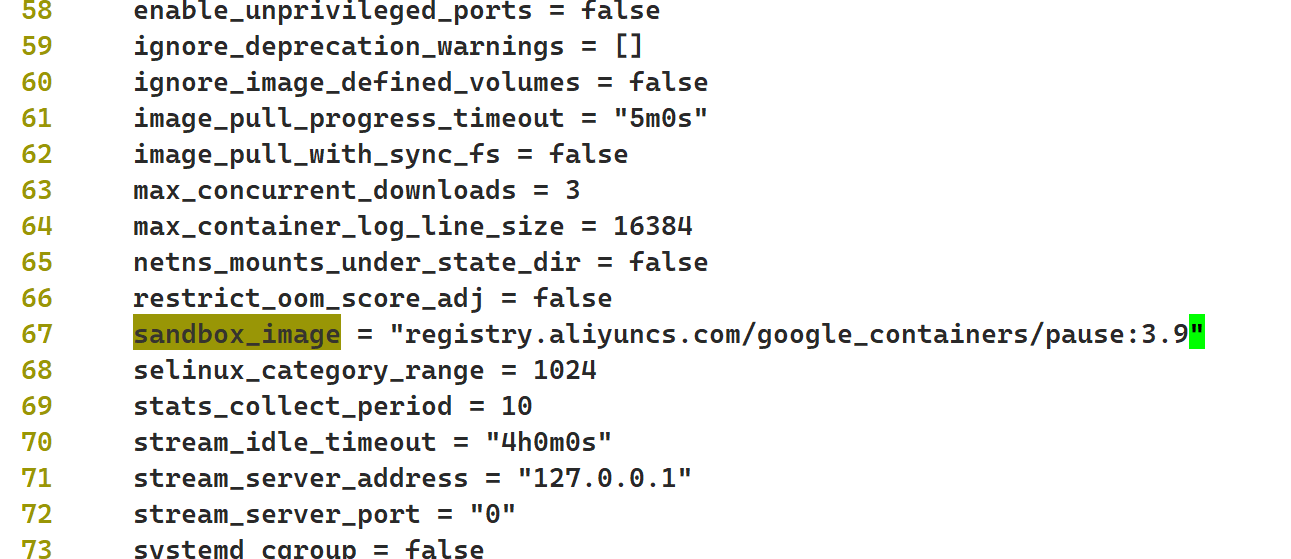

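Open the file with vim /etc/containerd/config.toml and change sandbox_image = "registry.k8s.io/pause:3.8" to sandbox_image = "registry.aliyuncs.com/google_containers/pause:3.9"

In the screenshot the setting is on line 67; the line number may differ in your file.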
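Since the same edit has to be made on all three nodes, it could also be scripted instead of done in vim. The following is a minimal sketch, assuming GNU sed (as used elsewhere in this guide) and a single sandbox_image line in the file. `set_sandbox_image` is a hypothetical helper name.

```shell
# set_sandbox_image IMAGE [FILE]
# Rewrites the value of the sandbox_image line in FILE
# (default: /etc/containerd/config.toml) to IMAGE, keeping the indentation.
set_sandbox_image() {
  sed -i "s#^\([[:space:]]*sandbox_image[[:space:]]*=[[:space:]]*\).*#\1\"$1\"#" "${2:-/etc/containerd/config.toml}"
}
```

Run as root, `set_sandbox_image registry.aliyuncs.com/google_containers/pause:3.9` makes the change described above. containerd reads config.toml only at startup, so it needs a restart afterwards.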

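Pull the image and tag it (so that it is already cached)

# Pull into the k8s.io namespace, which is where kubelet looks for images through containerd

ctr -n k8s.io images pull registry.aliyuncs.com/google_containers/pause:3.9

# Tag it with the registry.k8s.io name

ctr -n k8s.io images tag registry.aliyuncs.com/google_containers/pause:3.9 registry.k8s.io/pause:3.9

# Restart containerd so the edited config.toml takes effect, then restart kubelet

sudo systemctl restart containerd

sudo systemctl restart kubelet

Run the initialization on the master node (k8s-node1)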

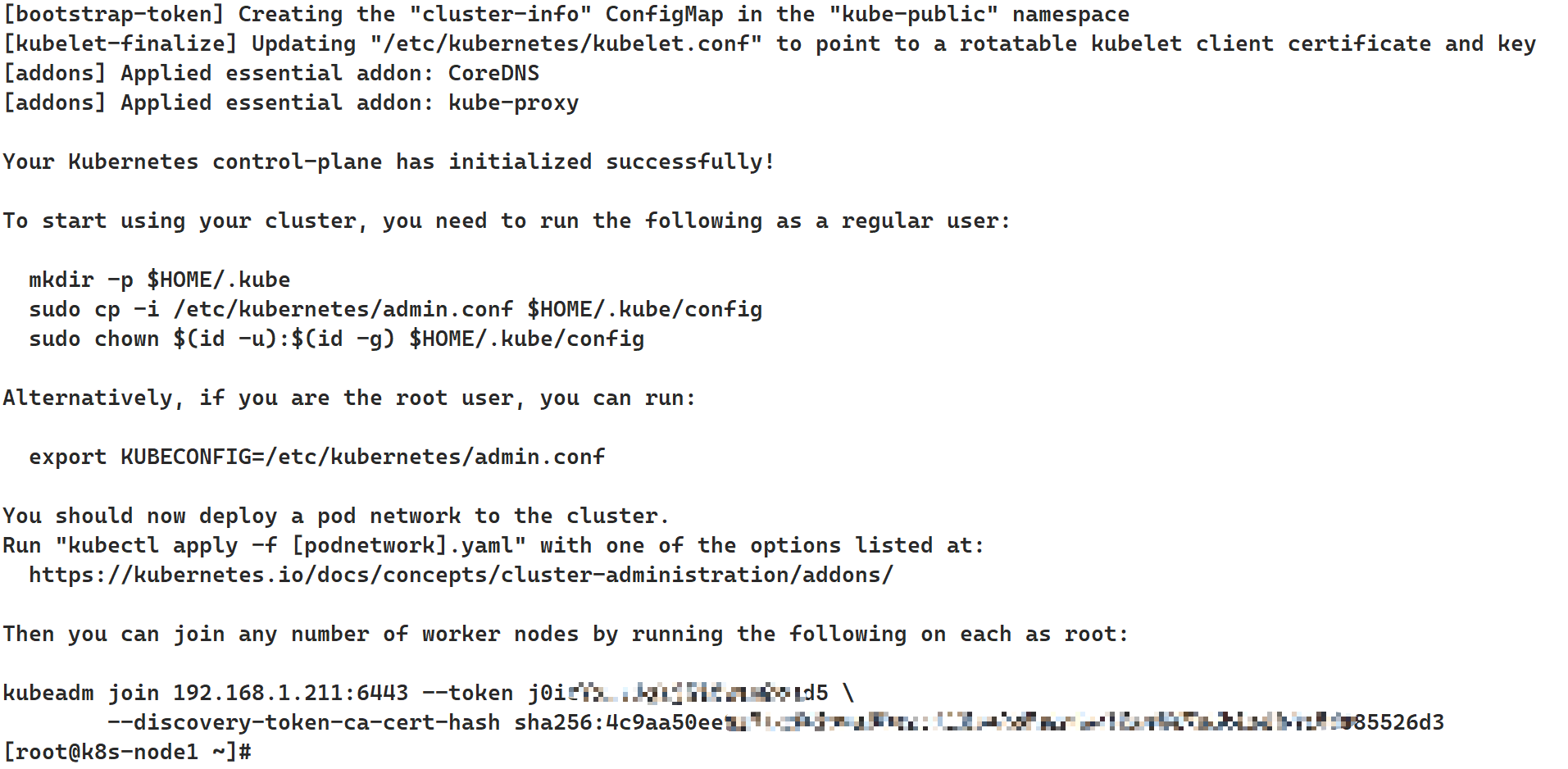

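Initialize

kubeadm init \
--apiserver-advertise-address=192.168.1.211 \
--image-repository registry.aliyuncs.com/google_containers \
--kubernetes-version v1.28.15 \
--pod-network-cidr=10.244.0.0/16

As shown below, the initialization succeeds. Record the token and the hash from the last two lines of the output; the worker nodes need them to join the cluster.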

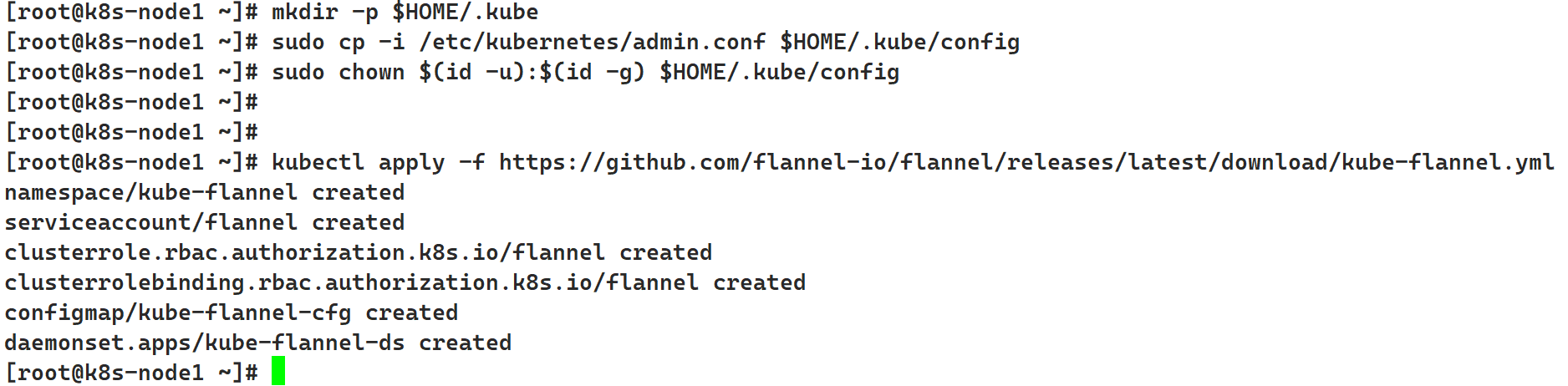
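Copying the join command from the terminal by hand is error-prone. If the kubeadm init output was saved to a file (for example by piping it through `tee`), the command can be extracted from it. This sketch assumes the join command starts at the beginning of a line and that continuation lines end with a backslash directly before the newline. `extract_join_command` and the log file are hypothetical.

```shell
# extract_join_command LOGFILE
# Prints the "kubeadm join ..." command, including its backslash-continued lines,
# from a saved copy of the kubeadm init output.
extract_join_command() {
  awk '/^kubeadm join/ { p = 1 } p { print } p && !/\\$/ { exit }' "$1"
}
```

If the output was not saved, or the token has expired (bootstrap tokens are valid for 24 hours by default), running `kubeadm token create --print-join-command` on the master prints a fresh join command.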

Configure kubectl and install the flannel network plugin

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

kubectl apply -f https://github.com/flannel-io/flannel/releases/latest/download/kube-flannel.yml

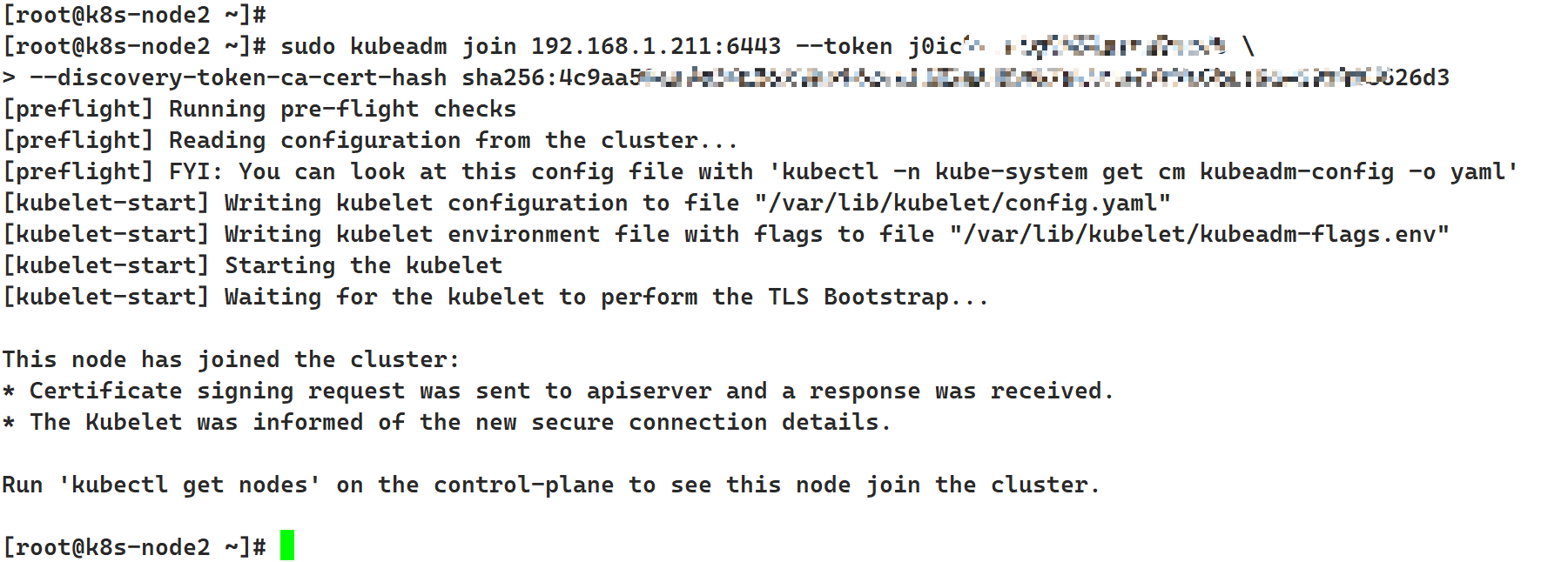

Join node2 and node3 to the cluster

sudo kubeadm join 192.168.1.211:6443 --token j0ixxxxxxxxxxxxxxd5 \
--discovery-token-ca-cert-hash sha256:4c9xxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxxx4185085526d3

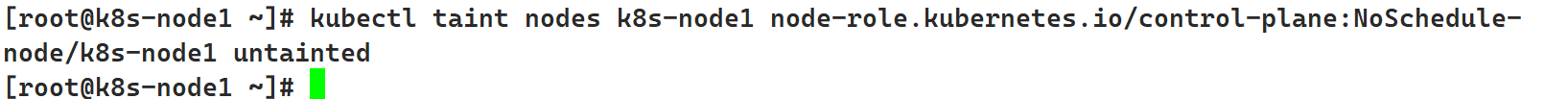

Allow the master node to schedule Pods

By default the master node does not run workloads. To allow Pods to be scheduled on it, run:

kubectl taint nodes k8s-node1 node-role.kubernetes.io/control-plane:NoSchedule-

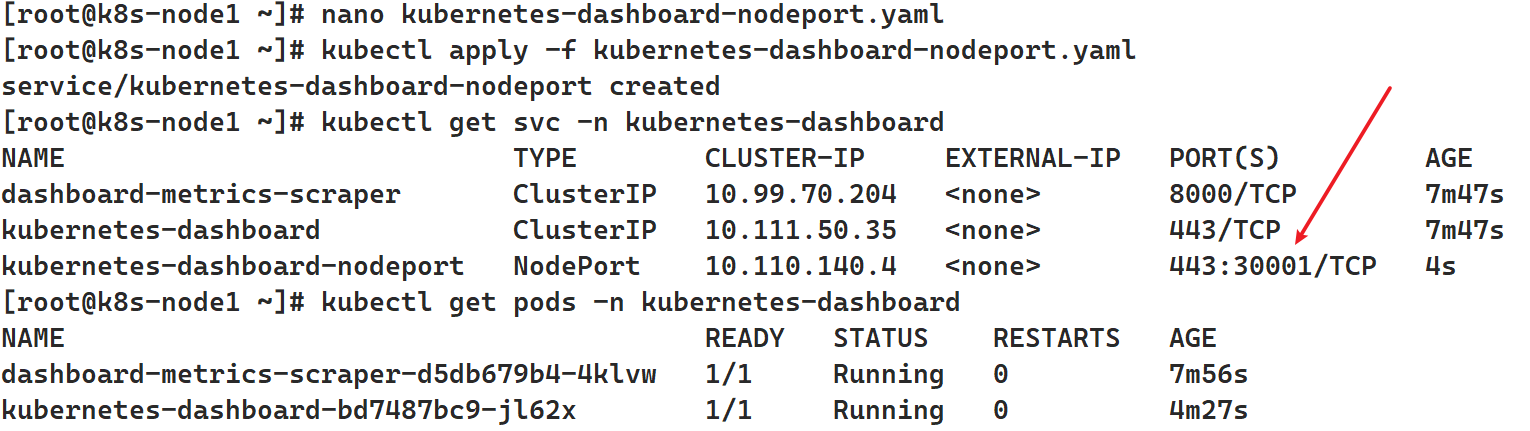

Install the Kubernetes Dashboard web UI

# Install using images from a mirror registry in China

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Namespace
metadata:
  name: kubernetes-dashboard
---
# The Deployment below mounts this Secret at /certs, so it must exist before the Pod can start.
apiVersion: v1
kind: Secret
metadata:
  name: kubernetes-dashboard-certs
  namespace: kubernetes-dashboard
type: Opaque
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
  labels:
    k8s-app: kubernetes-dashboard
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
        - name: kubernetes-dashboard
          image: registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard:v2.7.0
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 8443
              protocol: TCP
          args:
            - --auto-generate-certificates
            - --namespace=kubernetes-dashboard
          volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
            - name: tmp-volume
              mountPath: /tmp
          livenessProbe:
            httpGet:
              scheme: HTTPS
              path: /
              port: 8443
            initialDelaySeconds: 30
            timeoutSeconds: 30
      volumes:
        - name: kubernetes-dashboard-certs
          secret:
            secretName: kubernetes-dashboard-certs
        - name: tmp-volume
          emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      tolerations:
        - key: "node-role.kubernetes.io/master"
          operator: "Exists"
          effect: "NoSchedule"
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: dashboard-metrics-scraper
  namespace: kubernetes-dashboard
  labels:
    k8s-app: dashboard-metrics-scraper
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: dashboard-metrics-scraper
  template:
    metadata:
      labels:
        k8s-app: dashboard-metrics-scraper
    spec:
      containers:
        - name: dashboard-metrics-scraper
          image: registry.cn-hangzhou.aliyuncs.com/google_containers/metrics-scraper:v1.0.8
          imagePullPolicy: IfNotPresent
          ports:
            - containerPort: 8000
              protocol: TCP
          livenessProbe:
            httpGet:
              scheme: HTTP
              path: /
              port: 8000
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - mountPath: /tmp
              name: tmp-volume
      serviceAccountName: kubernetes-dashboard
      nodeSelector:
        "kubernetes.io/os": linux
      tolerations:
        - key: "node-role.kubernetes.io/master"
          operator: "Exists"
          effect: "NoSchedule"
      volumes:
        - name: tmp-volume
          emptyDir: {}
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: kubernetes-dashboard
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: kubernetes-dashboard
    namespace: kubernetes-dashboard
---
apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kubernetes-dashboard
  labels:
    k8s-app: kubernetes-dashboard
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001
  selector:
    k8s-app: kubernetes-dashboard
EOF

Access the Dashboard

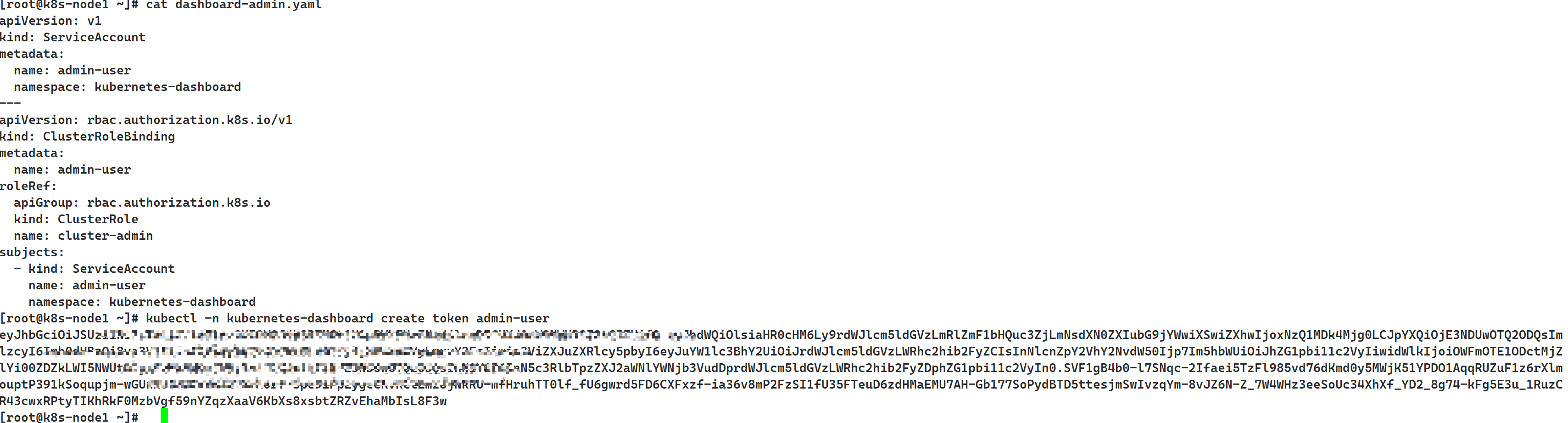

Create a file named dashboard-admin.yaml with the following content:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: admin-user
    namespace: kubernetes-dashboard

Then apply it:

kubectl apply -f dashboard-admin.yaml

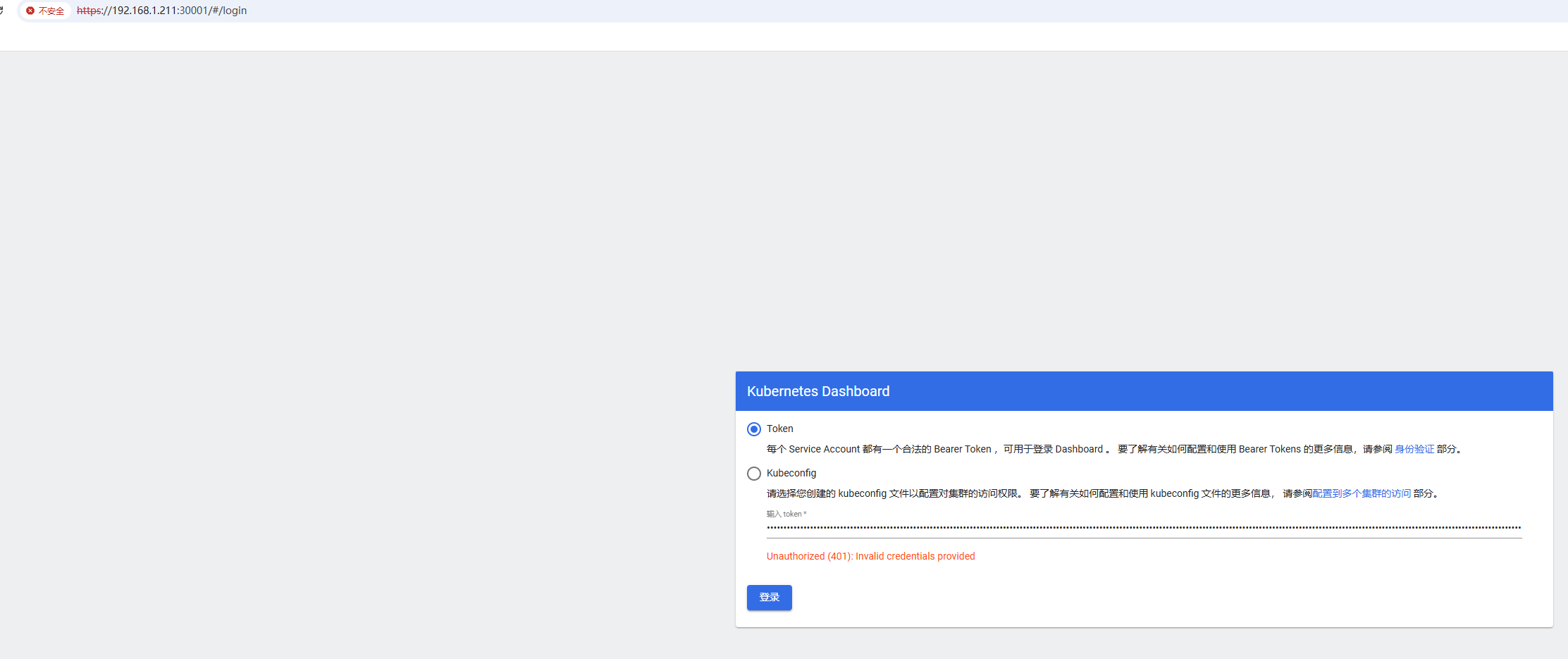

# Get an access token. The command prints one long string; copy it, because it is the Bearer Token you use to log in to the Dashboard

kubectl -n kubernetes-dashboard create token admin-user

# Enable access through a local proxy

kubectl proxy

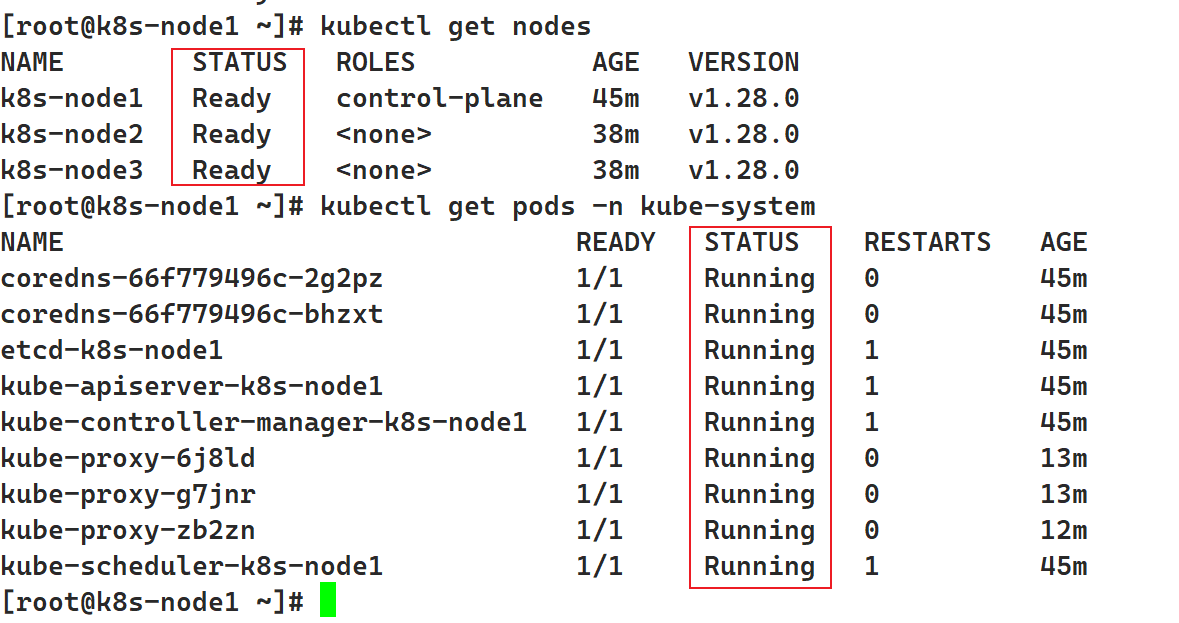
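The text does not spell out which address to open in the browser. The sketch below prints the two URLs that follow from the setup above: one through the NodePort Service (port 30001 in the manifest) and one through kubectl proxy, which listens on port 8001 of localhost by default. The function names are hypothetical.

```shell
# dashboard_nodeport_url NODE_IP [NODE_PORT]
# URL of the Dashboard through the NodePort Service (30001 in the manifest above).
dashboard_nodeport_url() {
  printf 'https://%s:%s/\n' "$1" "${2:-30001}"
}

# dashboard_proxy_url [PROXY_PORT]
# URL of the Dashboard through "kubectl proxy" on the local machine.
dashboard_proxy_url() {
  printf 'http://localhost:%s/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/\n' "${1:-8001}"
}
```

For example, `dashboard_nodeport_url 192.168.1.211` prints the address to open from another machine on the network. Because the certificate is auto-generated and self-signed, the browser is expected to show a warning before the login page.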

Verifying the result

The installation succeeded, as the output of the following commands shows:

kubectl get nodes

kubectl get pods -n kube-system

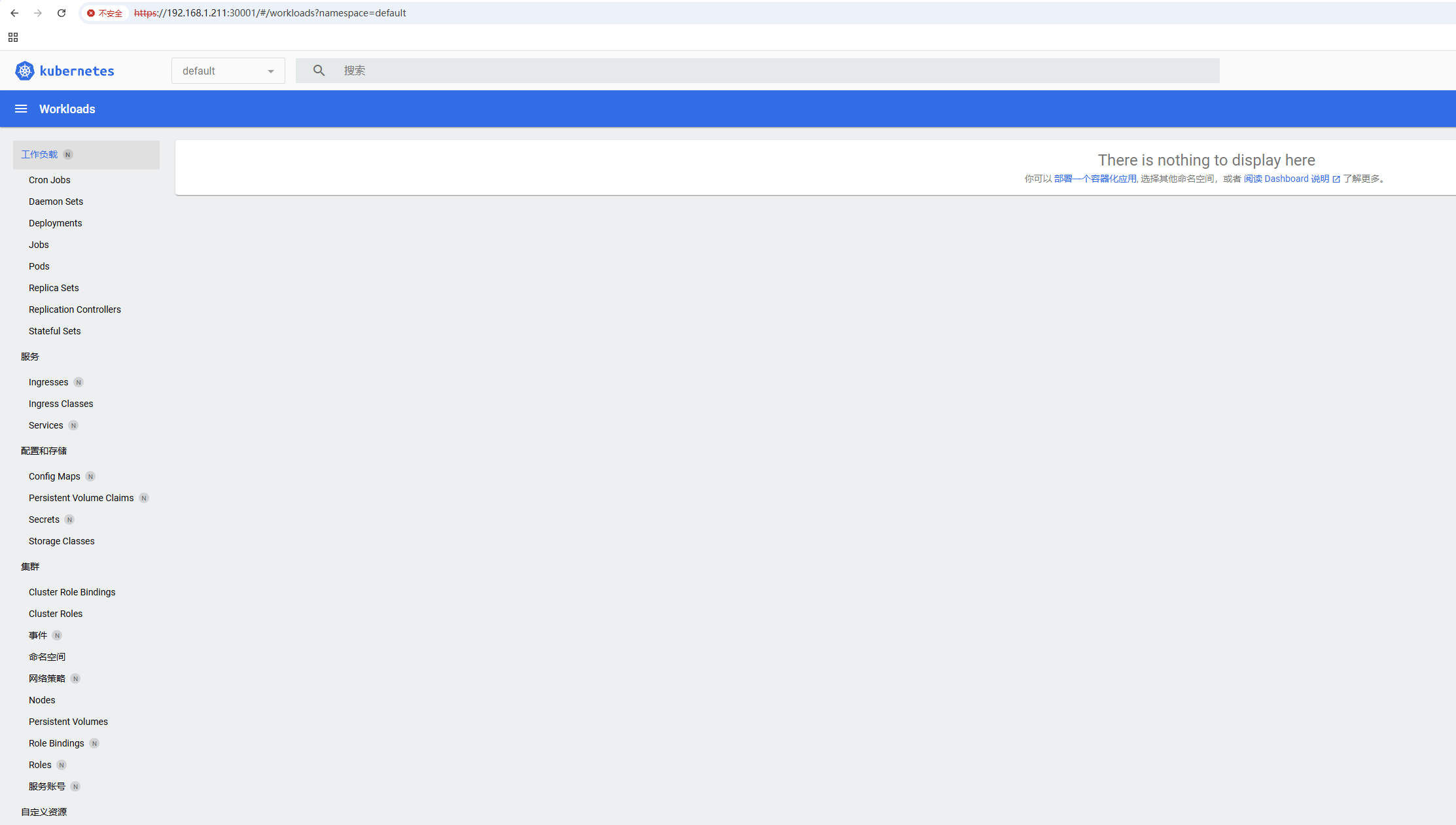

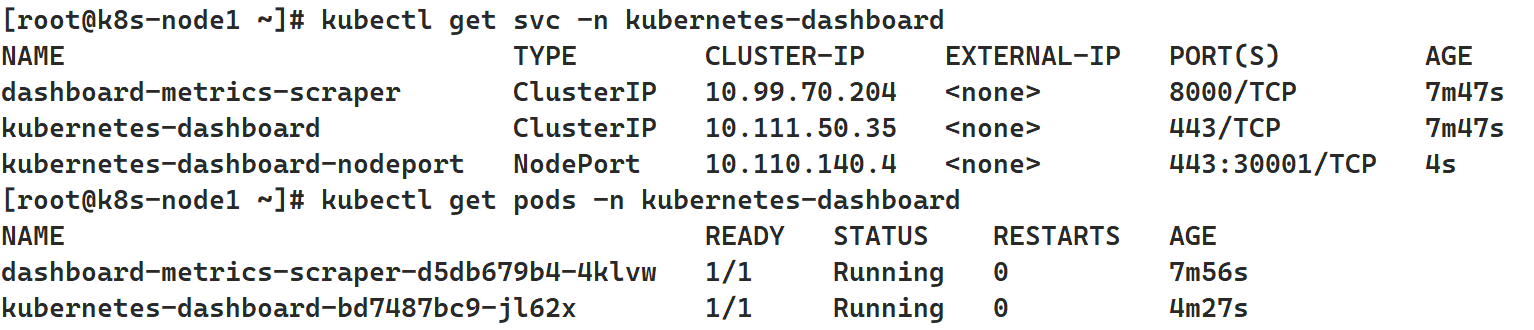
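Reading the node table by eye works for three nodes, but the same check can be automated. This sketch counts the nodes whose STATUS column does not start with Ready, assuming the default column layout of `kubectl get nodes --no-headers` (name first, status second). `count_not_ready` is a hypothetical helper name.

```shell
# count_not_ready
# Reads "kubectl get nodes --no-headers" output on stdin and prints how many
# nodes have a STATUS (second column) that does not start with "Ready".
count_not_ready() {
  awk '$2 !~ /^Ready/ { n++ } END { print n + 0 }'
}
```

It would be used as `kubectl get nodes --no-headers | count_not_ready`. A result of 0 means every node has joined and the network plugin is working; nodes typically stay NotReady until flannel is running on them.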

The Dashboard is installed successfully.

The web panel can be opened in the browser.